Begun, the deepfake wars have.

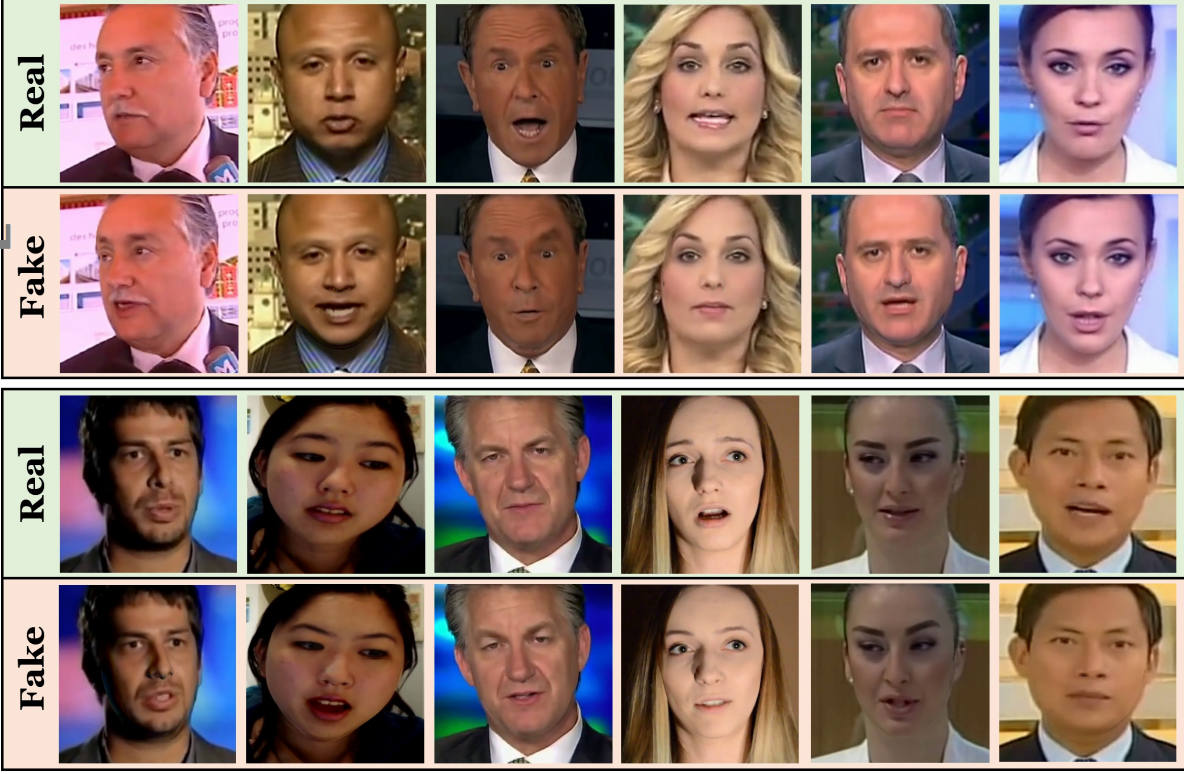

As more people use FakeApp -- the software that makes it comparatively easy to create "deepfaked" face-swapped videos -- a couple of researchers have decided to fight fire with fire. They trained a deep-learning neural net on tons of examples of deepfaked videos and produced a model that's better than any previous automated technique at spotting hoaxery. (Their paper documenting the work is here.)

This is good, obviously, though as you might imagine the very techniques they're using here could themselves be employed to produce better deepfakes. Technology!

As MIT Technology Review reports:

> The results are impressive. XceptionNet clearly outperforms other techniques in spotting videos that have been manipulated, even when the videos have been compressed, which makes the task significantly harder. “We set a strong baseline of results for detecting a facial manipulation with modern deep-learning architectures,” say Rossler and co.
>
> That should make it easier to spot forged videos as they are uploaded to the web. But the team is well aware of the cat-and-mouse nature of forgery detection: as soon as a new detection technique emerges, the race begins to find a way to fool it.
>
> Rossler and co have a natural head start since they developed XceptionNet. So they use it to spot the telltale signs that a video has been manipulated and then use this information to refine the forgery, making it even harder to detect.
>
> It turns out that this process improves the visual quality of the forgery but does not have much effect on XceptionNet’s ability to detect it. “Our refiner mainly improves visual quality, but it only slightly encumbers forgery detection for deep-learning method trained exactly on the forged output data,” they say.